All of us have heard the statistic that 90%, 88%, 87%, 85% or some other crazy percentage of ML models never make it to production. To be quite honest, I have no idea how someone calculated this, and I am just as puzzled as this person is. But that is beside the point. The number matters much less than why so many businesses still struggle to derive business value from Machine Learning!

To understand this, we had hour-long 1:1 conversations with 200+ people about the workflow of building ML models: coming up with business requirements, translating them into sprints, data collection, model building, making the model production-ready, deploying, retraining, A/B testing, debugging, monitoring, observability and so on, as well as closing the loop from any step back to any other step in this sequence. The conversations covered diverse perspectives from business leaders, engineering leaders, product managers, data scientists, DevOps, data engineers & ML engineers! After these qualitative conversations, we also ran an objective survey to get a broader sense of the prevailing problems; its results can be found here.

While applied Machine Learning is a relatively new field and problems exist in practically every single part of the pipeline, there was one resounding and prominent theme that emerged out of our conversations-

Time lag in and between every step of building and launching ML models reduces the confidence and morale of engineering & leadership teams, and that kills ML models!

In the world of software, we are used to making data-driven decisions. We want to see results fast, run A/B tests and justify the costs, and if a project doesn’t yield sufficient RoI in a reasonable time, we are taught to shoot it in the head. And time is the one thing that ML takes a lot of! This is true for companies across the spectrum, albeit for different reasons-

- Early-stage startups (seed through Series C)

- Traditional behemoths like Walgreens, Target, Siemens

- Tech giants like Uber, Amazon, Netflix etc.

Let us study this in detail.

Early-stage startups

Often, building and deploying Machine Learning pipelines requires a varied skillset-

- Understanding business use case — typically Product Managers

- Data logging & processing pipelines — typically Data Engineers

- Model experimentation & building — typically Data Scientists

- Creating Inference APIs & deployment — typically ML Engineers

- Model scaling, staging, prod pipelines — typically DevOps team

- A/B test frameworks, Monitoring & Observability, Retraining Pipelines

Very frequently, startups don’t have the resources to hire different people to cover the entire skillset spectrum. Startups naively believe that they can hire 1–2 really smart people who have been there, done that, and can solve all the above problems. They don’t realise that such mythical creatures don’t really exist outside of the founder’s dreams!

In the end, the data scientist or ML engineer who was hired does try to live by the common saying- “in a startup, you do whatever it takes to get things done”. But the learning curve for the technologies in this space (which is changing rapidly) is really steep, and it ends up taking a long time to learn and implement each of these different parts. Naturally, the pipelines built by this newbie are often not foolproof and start breaking at different points, which sets off a sequence of patch-work projects: a classic reason for draining engineering resources! At this point, the frustrated founder makes a hard call- “Alright, we are not yet ready for Machine Learning- let’s build a simple, easy-to-maintain, rule-based system that just works- back to basics!”

Traditional behemoths like Walgreens & Target

In large companies, the reason ML models end up taking too much time is at least as much organisational as it is technical. Typically, some business user, maybe from a Customer Success or Sales team, will get off a customer call and come up with an idea for a Machine Learning project. The idea goes through translation by Product Managers, then resource allocation & sprint planning meetings, before it finally lands in the hands of a Data Scientist. Let’s assume this idea was one of the simpler ones, where the organisation already had the data to be fed into the model (say, user click stream).

The data scientist then builds the model but has no way of getting quick feedback from the business user because-

- The notebook is too technical for business people

- Results stored in Excel sheets are not interactive

- Data scientists typically do not have the skillset to quickly host the model and expose an API.

Without the feedback from the business user, how does the DS know-

- Does their model solve the problem the business user asked about, or was the intent lost in translation?

- Are they missing some critical boundary conditions that, if missed, would make the customer livid?

- Are they using any data sources that might introduce biases in the model or adversely affect some user segment?

Even if all of the above falls into place, who knows for sure that the initial hunch of the business user was right? The ultimate feedback can only come from the end-user. But how do you get the model into the hands of the end-user?

Yes, you guessed it correctly- you need to get the engineering & product teams involved. There will be resource allocation meetings again, then sprint planning, and eventually the model will be integrated into the product. This might take months, and by that time the business user might realise that the priority of the project has changed! And then the dreadful statement comes- “No point investing more resources in this project- let’s not throw good money after bad!”

Tech Giants like Uber & Amazon

Needless to say, these companies are the furthest ahead of the curve, and they usually don’t take months to launch ML models to production. But such delays are not unheard of even at these top companies, and they face a problem that is unique to them.

Very frequently, large tech giants build their own MLOps platform that does everything from feature stores, to algorithm implementations, to dependency management, to deployment, to model inference and beyond. Building such generic platforms that work for all use cases is a hard engineering problem, and these systems end up making assumptions of the form-

- These are the most common libraries that we expect data scientists to use

- As long as data scientists use “out-of-box” algorithms, everything works seamlessly, but if they want to develop their own model, they will have to do X, Y, & Z steps.

Let’s examine these assumptions and make the case that they are not practical. ML algorithms and toolsets are constantly improving, and the cutting-edge problems these companies work on need cutting-edge solutions. This means that the more ML-heavy a company tries to be, the more custom solutions it will need, and the greater the need to pull in dedicated engineering resources!

For this very reason, data science teams very frequently settle for out-of-box solutions, which carries a huge opportunity cost. If the Data Scientist or the company decides to go the harder route, i.e. advancing the platform so that it supports custom, advanced solutions, then guess what: you have to make a business case, get the right resources and talk to people from many orgs. Whether or not it results in an improved platform, it sure adds delays!

And if, after all of these investments, things don’t work out as planned once launched to the user, that only reinforces the habit of taking the easier out-of-box route for the next project.

Conclusion & Next Steps

This theme of ML models taking “too much time” for various reasons came out starkly in all our user conversations.

- Startups that lack the full range of people and tools, and that don’t have much time to iterate and figure out the optimal solution, scrap ML projects and settle for rule-based systems!

- Large traditional companies with organisational challenges scrap ML projects because they take too long to come to life and frequently fail to create justifiable business impact.

- Modern tech companies with MLOps platforms optimised for production, but not so much for open-ended experimentation, end up settling for “out-of-box” supported algorithms & libraries. Utilising the full potential of Machine Learning would take too much time otherwise.

We were astonished to see this common theme across such a broad spectrum of companies-

ML models taking too much time, with delayed business impact and slow feedback cycles, is one of the primary reasons why a lot of ML models never see the light of day.

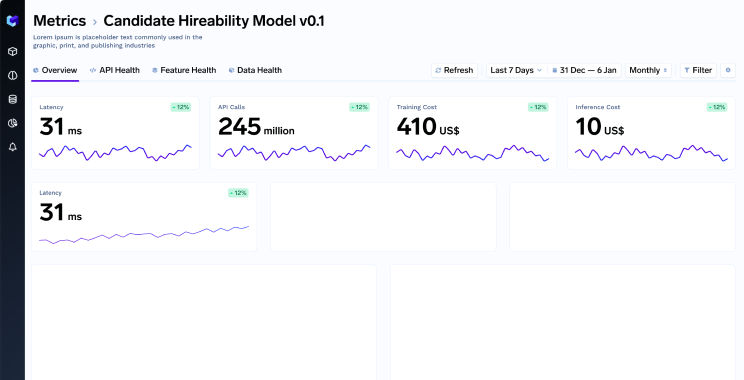

We think that a solution enabling seamless communication between business users & data scientists, and quick feedback from consumers to verify business impact before a lot of organisational resources are spent on an ML solution, will increase confidence and investment in Machine Learning, and also make those investments much more fruitful.

We don’t claim to have a full solution to the problem yet but we are working on it. We are talking to smart people and figuring out how we can alleviate if not completely solve this problem.

We strongly believe that a problem this big requires diverse perspectives to come together. If you relate to this problem, have thoughts to share, have ideas on how to solve it, or want to hear our ideas, we welcome you to comment or come talk to us.

I can be reached personally at nikunjbjj@gmail.com. And here’s the LinkedIn for me and my cofounders-

This blog was first published on Medium at https://medium.com/@nikunjbajaj/time-killed-my-ml-model-48521fad6c4 on Aug 30, 2021
