Machine learning (ML) is revolutionising industries and applications ranging from healthcare and finance to self-driving cars and fraud detection. However, deploying ML systems in production is challenging because of factors such as technical debt and a lack of production readiness. Technical debt is an ongoing concern for ML systems: it refers to the cumulative cost of design, implementation, and maintenance decisions made to deliver software more quickly, with the promise of paying them off later. Accumulated technical debt can carry significant costs in time, money, and performance. The concept of technical debt in ML was first proposed in the paper "Machine Learning: The High Interest Credit Card of Technical Debt" by Sculley, Holt et al. in 2014. Production readiness refers to the set of practices, processes, and technologies that ensure an ML system is reliable, scalable, maintainable, and secure.

"Technical debt is like a credit card. It's easy to accumulate, but hard to pay off." - Chris Granger.

Evaluating the production readiness and technical debt of an ML system is crucial to ensure that the system can operate effectively and efficiently in production. In this blog, we define a modified ML System Robustness Score, a rubric for evaluating the production readiness and technical debt of ML systems, inspired by the paper "The ML Test Score: A Rubric for ML Production Readiness and Technical Debt Reduction" by Eric Breck et al. We explore the categories that make up the ML System Robustness Score and the tests you could perform in each category.

ML System Robustness Score

The ML System Robustness Score aims to provide a comprehensive evaluation framework for ML systems and to identify potential technical debt issues. We break the scoring down into 6 major categories with 22 sub-categories, which we dive into below:

- Data Quality and Preparation

- Model Training and Performance

- Model Evaluation and Interpretability

- Model Deployment and Monitoring

- Infrastructure and Operations

- Security, Failure Handling, and Compliance

Data Quality and Preparation

"We don't have better algorithms. We just have more data."

- Peter Norvig (American Computer Scientist)

The quote from Peter Norvig aptly summarises the importance of data in ML models. The quality of the data used to train and test the ML model has a direct impact on its performance, and it is essential to ensure that the data is relevant, accurate, and representative of the problem domain. Below are the major sub-categories of evaluation:

- Data quality and integrity: Is the data accurate, complete, consistent, and sufficient to train the model?

- Data privacy and security: Is the data protected against unauthorized access and use?

- Data bias and fairness: Is the data representative and free from bias, i.e. diverse enough to cover different scenarios and edge cases?
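As a rough illustration of the data quality and bias questions above, the sketch below computes a few simple signals on a list of records: the share of complete rows, the number of exact duplicates, and the label distribution. The function name `data_quality_report`, the field names, and the sample records are hypothetical, and real checks would depend on your schema and tooling.

```python
from collections import Counter


def data_quality_report(rows, required_fields, label_field):
    """Summarise completeness, duplicates and label balance for dict records."""
    total = len(rows)
    # Completeness: rows where every required field is present and non-empty.
    complete = sum(
        all(row.get(field) not in (None, "") for field in required_fields)
        for row in rows
    )
    # Consistency: count exact duplicate records (beyond the first copy).
    seen = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(count - 1 for count in seen.values())
    # Representativeness: share of each label, a crude signal of imbalance.
    labels = Counter(row.get(label_field) for row in rows)
    return {
        "rows": total,
        "complete_ratio": complete / total if total else 0.0,
        "duplicate_rows": duplicates,
        "label_share": {k: v / total for k, v in labels.items()} if total else {},
    }


# Hypothetical sample data, for illustration only.
sample = [
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": 34, "income": 52000, "label": "approve"},
    {"age": None, "income": 41000, "label": "reject"},
    {"age": 51, "income": 88000, "label": "approve"},
]
print(data_quality_report(sample, ["age", "income"], "label"))
```

A low `complete_ratio`, many duplicates, or a heavily skewed `label_share` would each be a reason to score the corresponding sub-category lower.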

Model Training and Performance

The importance of model training and performance in achieving the desired outcomes cannot be overstated. The constant evolution of ML models and the increasing size of datasets have led to a growing demand for more powerful hardware to train them. The emergence of Large Language Models (LLMs) has reinforced this trend in the field of natural language processing.

To ensure that models continue to perform well, it is crucial to regularly retrain them with new data and build systems that support various types of hardware. By adopting this approach, developers can ensure that the ML models they create are up-to-date, efficient, and capable of handling increasingly complex and larger datasets. The evaluation of model performance can be broken down into several subcategories, including those listed below:

- Model performance metrics: Are the performance metrics aligned with the business requirements?

- Model selection and tuning: Have the appropriate models been selected and fine-tuned?

- Model stability and reproducibility: Is the model stable and reproducible over time?
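One way to probe the reproducibility question is to train the same model several times with a fixed seed and confirm that the results match exactly. The sketch below does this for a toy linear model trained with stochastic gradient descent; `train_linear_model` and `is_reproducible` are hypothetical names, and a real pipeline would also need to pin library versions, data snapshots, and hardware-dependent settings.

```python
import random


def train_linear_model(data, seed, epochs=50, lr=0.01):
    """Fit y = w*x + b with SGD; all randomness comes from the given seed."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # shuffling order is controlled by the seed
        for x, y in samples:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b


def is_reproducible(train_fn, data, seed, runs=3):
    """Return True if repeated training with the same seed gives identical results."""
    results = [train_fn(data, seed) for _ in range(runs)]
    return all(result == results[0] for result in results)


# Toy data drawn from y = 2x + 1, for illustration only.
toy_data = [(x, 2 * x + 1) for x in range(5)]
print(is_reproducible(train_linear_model, toy_data, seed=42))
```

Running the same check across several seeds and comparing the spread of the learned parameters gives a rough view of stability as well.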

Model Evaluation and Interpretability

Assessing the performance of an ML model against a set of metrics is an integral part of the model evaluation that ensures accurate predictions. On the other hand, model interpretability is equally important as it enables developers and stakeholders to comprehend the model's inner workings and make informed decisions based on its outputs. A lack of interpretability can result in the model being viewed as a "black box," making it difficult to trust its outputs.

To evaluate the model's performance accurately, organisations need to consider several subcategories, including those listed below:

- Model interpretability: Can the model's outputs be easily understood and explained? Is the model transparent and fair?

- Feature importance and contribution: Can the model's features be ranked by importance and contribution?

- Evaluation environment: Is the evaluation data representative of production data, and is the evaluation environment similar to the production environment?

- Counterfactual explanations: Can the model provide explanations for counterfactual scenarios?
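For the feature importance question, one model-agnostic technique is permutation importance: shuffle one feature at a time and measure how much the error grows. The sketch below is a minimal pure-Python version under the assumption that the model is any callable taking a feature row; the helper names and the toy model are hypothetical and not taken from the papers cited here.

```python
import random


def mean_abs_error(model, X, y):
    """Average absolute difference between predictions and targets."""
    return sum(abs(model(row) - target) for row, target in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_features, seed=0):
    """Error increase when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = mean_abs_error(model, X, y)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Rebuild the rows with only feature j replaced by its shuffled values.
        X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
        importances.append(mean_abs_error(model, X_perm, y) - baseline)
    return importances


# Toy model that depends only on the first feature, for illustration.
toy_model = lambda row: 3 * row[0]
X = [[i, i % 3] for i in range(20)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y, n_features=2))
```

Features whose shuffling barely changes the error contribute little to the predictions, which gives a simple ranking to discuss with stakeholders.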

Model Deployment and Monitoring

Effective model deployment and monitoring can help organizations achieve optimal ML test scores and ensure that their models continue to provide value over time. Consider these sub-categories:

- Deployment infrastructure: Is the deployment infrastructure scalable and reliable?

- A/B testing and experimentation: Is the model tested and validated in controlled experiments? Is the roll-out process smooth enough to avoid downtime?

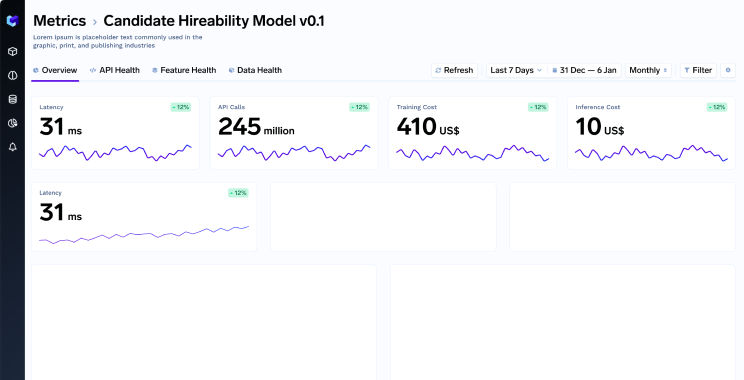

- Monitoring and alerting: Is there a logging infrastructure in place? Are there mechanisms in place to monitor the model's performance and alert when issues arise?

- Model updates: Does the system automatically update models as new data and features become available?
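A monitoring check often starts with comparing the distribution of a feature or model score in production against a reference sample. The sketch below uses the Population Stability Index (PSI) for that comparison. The bin count, the `1e-6` floor, and the `PSI_ALERT_THRESHOLD` value of 0.25 are assumptions for illustration; teams usually tune these to their own data.

```python
import math

PSI_ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb value, not from the source


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a production sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical reference and production samples.
reference = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]
psi = population_stability_index(reference, production)
if psi > PSI_ALERT_THRESHOLD:
    print(f"ALERT: drift detected, PSI={psi:.2f}")
```

In practice the alert would feed whatever logging and paging infrastructure is already in place rather than `print`.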

Infrastructure and Operations

Infrastructure came up under the training and performance category, but it plays a critical role in operations as well as in training ML models efficiently and accurately. Below are the sub-categories to be considered:

- Resource allocation and optimization: Are the resources allocated and optimized to maximize efficiency and minimize costs?

- Containerization and orchestration: Are the containers and services managed in a scalable and efficient manner?

- Continuous integration and deployment: Are changes to the codebase automatically tested, built, and deployed?

- ROI measurement: Can you measure the business impact of the ML model once it is serving production?

Security, Failure Handling, and Compliance

This is the last category and one of the most important. It is split into the following sub-categories:

- Access control and authorization: Are access controls and authorization policies in place to protect against unauthorized access?

- Compliance and regulatory requirements: Is the system compliant with relevant regulations and requirements?

- Error handling and recovery: Can the ML system recover gracefully from failures and handle errors caused by drift in systems?

- Data protection and encryption: Is sensitive data protected and encrypted in transit and at rest?
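For the error handling and recovery question, a common pattern is to wrap the prediction call so that exceptions and invalid outputs fall back to a safe default instead of failing the request. The sketch below shows one possible shape; `predict_with_fallback`, the fallback value, and the example models are hypothetical.

```python
import math


def predict_with_fallback(model, features, fallback):
    """Return (prediction, source); use the fallback on errors or invalid output."""
    try:
        prediction = model(features)
        # Treat non-numeric, NaN, or infinite outputs as failures too.
        if not isinstance(prediction, (int, float)) or not math.isfinite(prediction):
            raise ValueError(f"invalid prediction: {prediction!r}")
        return prediction, "model"
    except Exception as exc:
        # In a real system this would be logged and counted for alerting.
        return fallback, f"fallback ({type(exc).__name__})"


# Hypothetical models: one healthy, one that breaks on a missing feature.
healthy_model = lambda features: 0.9
broken_model = lambda features: features["missing_feature"] * 2.0

print(predict_with_fallback(healthy_model, {"x": 1.0}, fallback=0.5))
print(predict_with_fallback(broken_model, {"x": 1.0}, fallback=0.5))
```

Returning the source alongside the value makes it possible to track how often the fallback is used, which is itself a useful health signal.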

Calculating your ML System Robustness Score

For final scoring, a company can rate each of the 22 sub-categories on a 0-4 scale and add the ratings up, giving a total out of 88. The total can be read as follows:

- A score of <25 means that the ML System is probably not ready and a lot of challenges need to be addressed.

- A score in the 25-40 range would be an indicator that the current system is adequate but might start creating failure points as you scale.

- A score in the 40-60 range reflects a solution that is robust and will work as the systems scale.

- Anything beyond 60 would represent a best-in-class solution at your company.
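The scoring can be sketched in a few lines of code. The version below assumes each of the 22 sub-categories receives one 0-4 rating and that the ratings are simply summed. It also assumes a total of exactly 40 counts as robust and a total of exactly 60 does not yet count as best-in-class, since the bands above leave those boundaries open. The names `SUBCATEGORIES` and `robustness_score` are hypothetical.

```python
# Sub-category names follow the lists in this post; the per-item 0-4 rating
# and the summing rule are assumptions made for this sketch.
SUBCATEGORIES = {
    "Data Quality and Preparation": [
        "Data quality and integrity",
        "Data privacy and security",
        "Data bias and fairness",
    ],
    "Model Training and Performance": [
        "Model performance metrics",
        "Model selection and tuning",
        "Model stability and reproducibility",
    ],
    "Model Evaluation and Interpretability": [
        "Model interpretability",
        "Feature importance and contribution",
        "Evaluation environment",
        "Counterfactual explanations",
    ],
    "Model Deployment and Monitoring": [
        "Deployment infrastructure",
        "A/B testing and experimentation",
        "Monitoring and alerting",
        "Model updates",
    ],
    "Infrastructure and Operations": [
        "Resource allocation and optimization",
        "Containerization and orchestration",
        "Continuous integration and deployment",
        "ROI measurement",
    ],
    "Security, Failure Handling, and Compliance": [
        "Access control and authorization",
        "Compliance and regulatory requirements",
        "Error handling and recovery",
        "Data protection and encryption",
    ],
}


def robustness_score(ratings):
    """Sum 0-4 ratings over all sub-categories and map the total to a band."""
    total = 0
    for names in SUBCATEGORIES.values():
        for name in names:
            rating = ratings[name]  # KeyError if a sub-category was not rated
            if not 0 <= rating <= 4:
                raise ValueError(f"{name}: rating must be between 0 and 4")
            total += rating
    if total < 25:
        band = "not ready"
    elif total < 40:
        band = "adequate"
    elif total <= 60:
        band = "robust"
    else:
        band = "best-in-class"
    return total, band


# Example: a team that rates every sub-category 2 out of 4.
example = {name: 2 for names in SUBCATEGORIES.values() for name in names}
print(robustness_score(example))
```

Keeping the ratings per sub-category, rather than only the total, also shows which categories pull the score down.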

Answering these questions and performing the tests can provide a comprehensive evaluation of the production readiness of an ML system as well as identify potential technical debt issues that may arise during the development and deployment of the ML system. By identifying these issues early on, steps can be taken to mitigate or eliminate them, reducing the overall technical debt of the system.

Evaluating Technical Debt similar to Software Systems

While we use the ML Test Score rubric as the base for the scoring framework above, there are other frameworks for evaluating the readiness of ML systems.

- An older framework is "An approach to software testing of machine learning applications", laid down by C. Murphy et al. in 2007. It emphasizes testing and validation throughout the development and deployment of ML systems, as with software systems. This approach combines traditional software testing methods, such as unit testing and integration testing, with specialised ML testing methods, such as model validation and data validation.

- A more recent framework is "Technology Readiness Levels (TRLs) for Machine Learning Systems", proposed by A. Lavin, C. Lee et al. in Oct 2022. The TRLs provide a systematic and detailed way to assess the maturity and readiness of ML systems, from the concept stage to the operational stage.

Conclusion

In conclusion, evaluating the production readiness and technical debt of ML systems is essential for successful deployment and maintenance. The ML Test Score provides a comprehensive rubric for evaluating these factors, covering aspects such as data quality, model performance, evaluation practices, operations, and monitoring. The TRLs for Machine Learning Systems and other frameworks can also provide complementary assessments of the system's maturity and readiness. Ongoing monitoring and maintenance, as well as thorough testing and validation, are crucial for minimizing technical debt and ensuring that the ML system remains production-ready.

👉

PS: Get a Free Diagnosis of your ML System done!

If you are interested in a diagnosis of your entire ML infrastructure, write to us at founders@truefoundry.com. We will send a pre-questionnaire and set up a 30-minute call to go over some questions that help us understand the system.

Post that, we will work with you to provide a free diagnosis and benchmarking of your ML System within a week.

References

- C. Murphy, G. E. Kaiser, and M. Arias, "An approach to software testing of machine learning applications," in SEKE, Citeseer, 2007.

- D. Sculley, G. Holt, D. Golovin, E. Davydov, T. Phillips, D. Ebner, V. Chaudhary, and M. Young, "Machine learning: The high interest credit card of technical debt," in SE4ML: Software Engineering for Machine Learning (NIPS 2014 Workshop), 2014.

- A. Lavin, C. Lee, et al., "Technology readiness levels for machine learning systems," Oct 2022.

- E. Breck, S. Cai, E. Nielsen, M. Salib, and D. Sculley (Google, Inc.), "The ML Test Score: A rubric for ML production readiness and technical debt reduction," 2017.
