In this article, we discuss deploying the Falcon model on your own cloud.

The Technology Innovation Institute in Abu Dhabi has developed Falcon, an innovative series of language models. These models, released under the Apache 2.0 license, represent a significant advancement in the field. Notably, Falcon-40B stands out as a truly open model, surpassing numerous closed-source models in its capabilities. This development brings tremendous opportunities for professionals, enthusiasts, and the industry as it paves the way for various exciting applications.

In this blog post, we will describe the LLMOps process on SageMaker: hosting the Falcon model in your own AWS account and the different options available. We also plan to release another blog post in the future focusing on running Falcon on other clouds.

We also wrote another blog post on deploying Llama 2 on your own cloud.

Moving on, the Falcon family has two base models: Falcon-40B and Falcon-7B. The 40B-parameter model currently tops the Open LLM Leaderboard, while the 7B model is the best in its weight class. In this post, we discuss the options for deploying the Falcon-40B model.

Falcon-40B requires ~90 GB of GPU memory, so it will not fit on a single A100 GPU with 80 GB of memory. An instance type that works on AWS is g5.12xlarge (https://aws.amazon.com/ec2/instance-types/g5/), which has 4 NVIDIA A10G GPUs with 24 GB each, for 96 GB in total. We can deploy the model either as an API endpoint for real-time inference or load it directly in code for batch inference use cases.
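The memory requirement above can be sanity-checked with some quick arithmetic. The sketch below is a rough estimate, not a measurement: it assumes a nominal 40 billion parameters stored in bfloat16 (2 bytes each) and takes the ~90 GB figure from this post as the total including runtime overhead. The helper names are ours, purely for illustration.

```python
import math

def weights_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just for the weights, in GB (bfloat16 = 2 bytes per parameter)."""
    return num_params * bytes_per_param / 1e9

def gpus_needed(required_gb: float, gb_per_gpu: float) -> int:
    """Smallest number of identical GPUs whose combined memory covers the requirement."""
    return math.ceil(required_gb / gb_per_gpu)

# Assumption: nominal 40e9 parameters; the real checkpoint is slightly larger.
weights_gb = weights_memory_gb(40e9)

# Assumption: ~90 GB total, the figure quoted in this post (weights plus overhead).
required_gb = 90

print(f"Weights alone: {weights_gb:.0f} GB")
print(f"A100 80 GB GPUs needed: {gpus_needed(required_gb, 80)}")
print(f"A10G 24 GB GPUs needed: {gpus_needed(required_gb, 24)}")
```

The weights alone already fill an 80 GB card, which is why the model has to be sharded across several GPUs, such as the four A10G GPUs on a g5.12xlarge.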

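The code to load the model and run a text-generation task on it is as follows:

# pip install "transformers[tokenizers]>=4.29.2,<5.0.0"
#     "sentencepiece==0.1.99" "accelerate>=0.19.0,<1.0.0"
#     "safetensors>=0.3.1,<0.4.0"

import torch
from transformers import pipeline

# Load the instruct-tuned 40B checkpoint in bfloat16 (2 bytes per parameter).
# device_map="balanced_low_0" spreads the weights across all GPUs while keeping
# GPU 0 lightly loaded, leaving it room for generation.
# Transformers releases that predate native Falcon support also need trust_remote_code=True.
generator = pipeline(
    "text-generation",
    model="tiiuae/falcon-40b-instruct",
    tokenizer="tiiuae/falcon-40b-instruct",
    torch_dtype=torch.bfloat16,
    device_map="balanced_low_0",
)

# Generate between 30 and 50 new tokens for the prompt.
output = generator(
    "Explain to me the difference between nuclear fission and fusion.",
    min_new_tokens=30,
    max_new_tokens=50,
)

# The pipeline returns a list of dicts, each with a "generated_text" key.
print(output)

Python code to load Falcon-40B in a notebook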

Deploying the Model as an API

We can deploy the model as an endpoint on AWS SageMaker, on an EKS cluster, or on a plain EC2 machine. To deploy the model on SageMaker, you can follow this tutorial: https://aws.amazon.com/blogs/machine-learning/deploy-falcon-40b-with-large-model-inference-dlcs-on-amazon-sagemaker/.
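Once a SageMaker endpoint is up, calling it from Python could look roughly like the sketch below. It uses the `invoke_endpoint` call of the boto3 `sagemaker-runtime` client. The endpoint name and the request schema (`inputs` plus `parameters`) are assumptions for illustration; the exact payload depends on the serving container you deploy, so check it against your own setup.

```python
import json

def build_payload(prompt: str, max_new_tokens: int = 50) -> str:
    """Serialize a request body. The schema here is an assumption, not a fixed API."""
    return json.dumps({
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
    })

def query_endpoint(endpoint_name: str, prompt: str, region: str = "us-east-1"):
    """Send a prompt to a deployed SageMaker endpoint and return the parsed JSON response."""
    import boto3  # imported lazily so the payload helper works without AWS dependencies

    client = boto3.client("sagemaker-runtime", region_name=region)
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt),
    )
    # response["Body"] is a streaming object; read it fully before parsing.
    return json.loads(response["Body"].read())

# Usage (requires AWS credentials and a live endpoint; the name is hypothetical):
# print(query_endpoint("falcon-40b-endpoint", "Explain nuclear fission vs fusion."))
```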

To deploy the model on EKS, we need to bring up an EKS cluster, set up a GPU node pool and the GPU operator on it, and add an ingress layer so that we can reach the API endpoint. TrueFoundry can make this entire journey much simpler by turning model deployment into a one-click process.

Cost Analysis

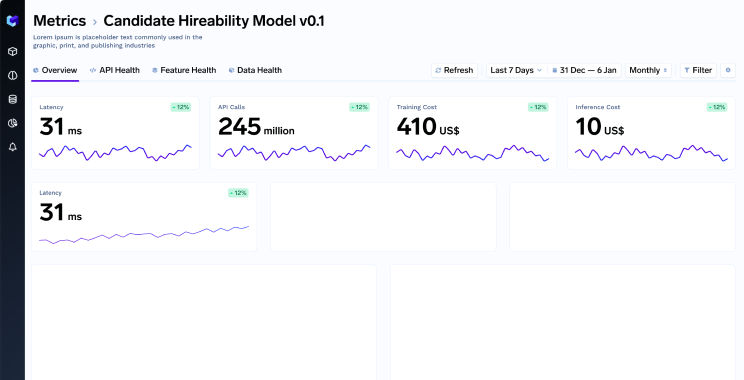

Let's dive into the cost of running the Falcon LLM on your own AWS account. We will compare the cost of running it on SageMaker vs TrueFoundry.

SageMaker Cost

Cost of SageMaker instance (ml.g5.12xlarge) per hour (us-east-1): $7.09

We ran a quick benchmark to measure the request throughput and latency of the Falcon model on SageMaker JumpStart. The exact numbers will vary based on your prompt lengths and request concurrency, but this should provide a rough idea:

As we can see in the graphs above, the p50 latency is around 5.7 seconds and p90 is around 9.4 seconds. We are able to get a throughput of around 6-7 requests per second.
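For readers who want to reproduce this kind of measurement, here is a minimal sketch of how p50/p90 latency and throughput can be computed from raw request timings. It uses the nearest-rank percentile definition, which is one common choice and not necessarily the one behind the graphs above. The sample latencies are made-up placeholder values, not our benchmark data.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def throughput_rps(num_requests, wall_clock_seconds):
    """Completed requests divided by the total wall-clock time of the test."""
    return num_requests / wall_clock_seconds

# Placeholder latencies in seconds, for illustration only.
latencies = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]

print("p50:", percentile(latencies, 50))
print("p90:", percentile(latencies, 90))
print("throughput:", throughput_rps(num_requests=600, wall_clock_seconds=100), "req/s")
```

Note that throughput depends on how many requests are in flight at once, so it cannot be derived from per-request latency alone.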

Deploy the model on EKS using TrueFoundry

TrueFoundry deploys the model on EKS, where we can use spot and on-demand instances to significantly reduce the cost. Let's compare the per-hour on-demand, spot, and reserved pricing of a g5.12xlarge machine in the us-east-1 region.

On Demand: $5.672 (20% cheaper than SageMaker)

Spot: $2.076 (70% cheaper than SageMaker)

1 Yr Reserved: $3.573 (50% cheaper than SageMaker)

3 Yr Reserved: $2.450 (65% cheaper than SageMaker)
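The savings percentages above follow directly from the hourly prices. A small sketch of the arithmetic, using only the prices quoted in this post (the variable and function names are ours):

```python
# Hourly prices quoted in this post (us-east-1).
SAGEMAKER_HOURLY = 7.09  # ml.g5.12xlarge on SageMaker
EKS_HOURLY = {
    "On Demand": 5.672,
    "Spot": 2.076,
    "1 Yr Reserved": 3.573,
    "3 Yr Reserved": 2.450,
}

def savings_pct(price: float, baseline: float) -> float:
    """Percentage by which `price` is cheaper than `baseline`."""
    return (1 - price / baseline) * 100

for name, price in EKS_HOURLY.items():
    print(f"{name}: {savings_pct(price, SAGEMAKER_HOURLY):.1f}% cheaper than SageMaker")
```

Spot prices fluctuate over time, so the spot figure should be read as a snapshot.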

Let's compare the throughput and latency of the model deployed on EKS using TrueFoundry.

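As we can see from the stats above, the p50 latency is 5.8 seconds and p90 is 9.5 seconds. The throughput is around 6-7 requests per second. These numbers are nearly identical to the SageMaker results (p50 of around 5.7 seconds, p90 of around 9.4 seconds, and 6-7 requests per second).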

Pricing Calculator

Let's estimate the cost of hosting the Falcon model for an actual use case with live traffic. Suppose we receive 100K requests a day and every request hits the Falcon model. 100K requests a day works out to roughly 1.2 requests per second, and each g5.12xlarge instance can handle about 6 requests per second, so 1 instance is enough to serve this traffic. However, for reliability, we will want to run at least 2 instances. Let's compare the cost of running 2 instances, assuming a 720-hour month:

SageMaker: $7.1 * 2 ($ per hour) = about $10000 a month

EKS:

Using spot instances: $2 * 2 ($ per hour) = $2880 a month

Using on-demand instances: $5.67 * 2 ($ per hour) = about $8000 a month

We can also use a combination of 1 spot and 1 on-demand instance to reduce the cost by around 40% and also achieve a high level of reliability.
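The estimate above can be turned into a small calculator for your own traffic numbers. This is a rough sketch under stated assumptions: a 720-hour month, the hourly prices quoted in this post, about 6 requests per second per instance, and evenly spread traffic (real traffic has peaks, so size for your peak rate). The function names are ours.

```python
import math

HOURS_PER_MONTH = 24 * 30   # assumption: 720-hour month
SECONDS_PER_DAY = 24 * 60 * 60

def instances_needed(requests_per_day: float, rps_per_instance: float,
                     min_instances: int = 2) -> int:
    """Instances required for the average request rate, with a floor for reliability."""
    avg_rps = requests_per_day / SECONDS_PER_DAY
    return max(min_instances, math.ceil(avg_rps / rps_per_instance))

def monthly_cost(hourly_prices) -> float:
    """Monthly cost of a fleet, given the hourly price of each instance."""
    return sum(hourly_prices) * HOURS_PER_MONTH

n = instances_needed(requests_per_day=100_000, rps_per_instance=6)
print("Instances:", n)
print(f"SageMaker:       ${monthly_cost([7.09] * n):,.0f}")
print(f"EKS on-demand:   ${monthly_cost([5.672] * n):,.0f}")
print(f"EKS spot:        ${monthly_cost([2.076] * n):,.0f}")
print(f"EKS 1 spot + 1 on-demand: ${monthly_cost([2.076, 5.672]):,.0f}")
```

The results differ slightly from the rounded figures above because the calculator uses the unrounded hourly prices.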

Chat with us

If you're looking to maximise the returns from your LLM projects and empower your business to leverage AI the right way, we would love to chat and exchange notes.